Developing Biosensors using Photonic Crystal Fibres and Surface Plasmon Resonance (SPR)

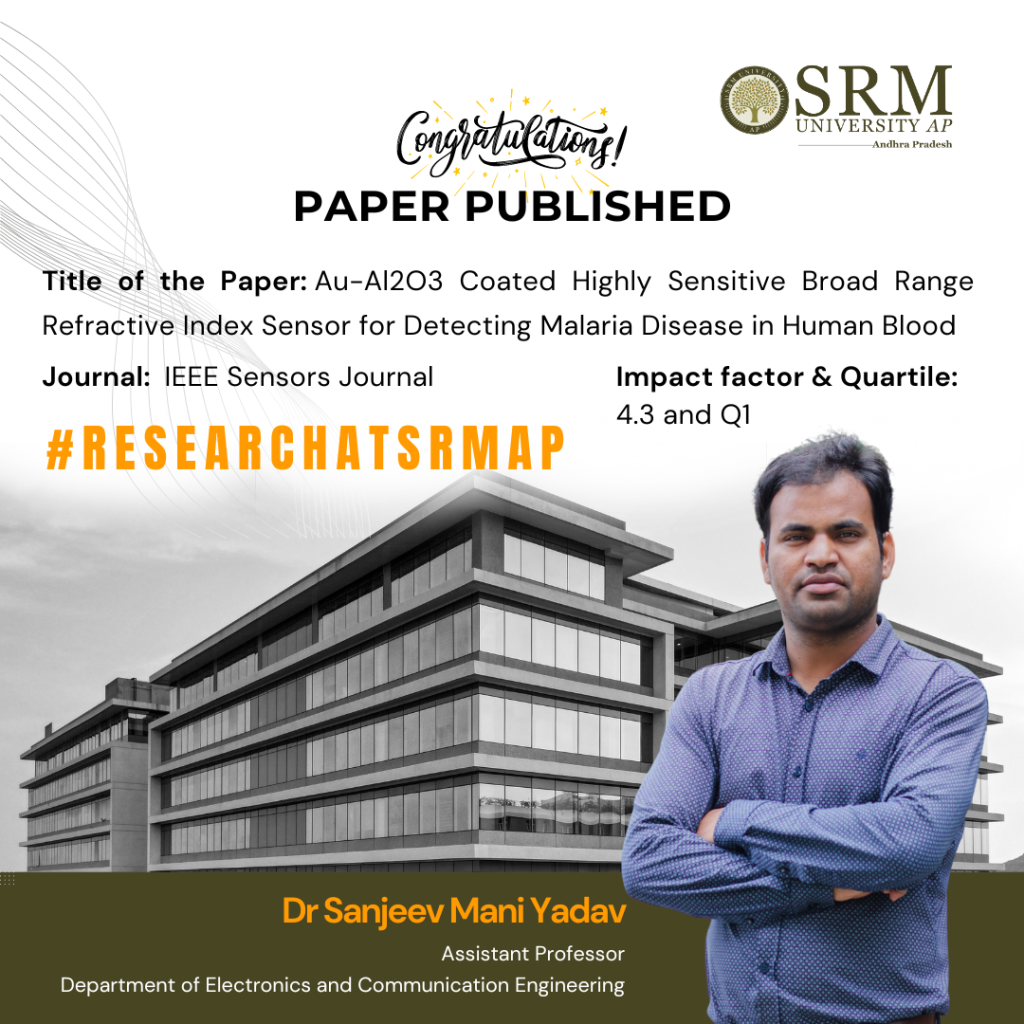

Dr Sanjeev Mani Yadav, Assistant Professor in the Department of Electronics and Communication Engineering, has published a cutting-edge research paper titled “Au-Al2O3 Coated Highly Sensitive Broad Range Refractive Index Sensor for Detecting Malaria Disease in Human Blood” in the IEEE Sensors Journal, which has an impact factor of 4.3. This research focuses on developing a highly sensitive biosensor using photonic crystal fibres and a technique called surface plasmon resonance (SPR) to detect changes in the refractive index, a measure of how much light bends when it enters a material. The biosensor can also detect malaria in the human body.

Abstract

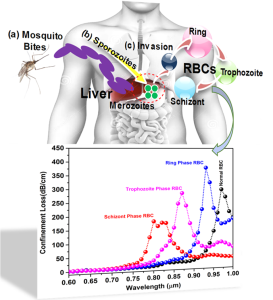

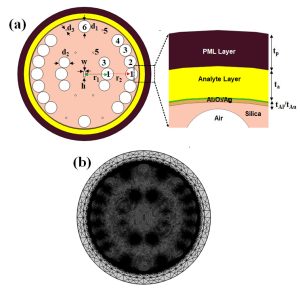

The paper presents a photonic crystal fibre-based surface plasmon resonance (SPR) biosensor for broad-range refractive index (RI) sensing along with the detection of malaria in the human body. An α-Al2O3-Au dielectric-metal interface has been proposed to excite the free electrons on the metal surface via the evanescent field, resulting in the SPR phenomenon. The proposed sensor shows a sufficient shift in resonance wavelength for a change in external RI from 1.32 to 1.40 for an optimised Al2O3/Au thickness of 50 nm/12 nm. The designed sensor shows a maximum sensitivity of 6000 nm/RIU when the external RI changes from 1.38 to 1.40. The detection accuracy of the designed sensor is reported to be 1.66 × 10^−5 RIU, which is comparable with that of other broad-range RI sensors. The proposed SPR sensor has been utilised to sense malaria in the human body by placing infected RBC samples on the dielectric-metal surface. The proposed study aids in detecting various stages of malaria-infected RBCs, including the Ring phase, Trophozoite phase, and Schizont phase, by measuring the shift in resonance wavelength. The sensor’s wavelength sensitivity varies across the phases: 5714.28 nm/RIU for the Ring phase, 5263.15 nm/RIU for the Trophozoite phase, and 5931 nm/RIU for the Schizont phase. The sensor exhibits the highest reported sensitivity among biological sensors in this category. The proposed sensor fulfils all the requirements for the early diagnosis of malaria in the human body, along with its high sensitivity, low detection limit, and capability of sensing a broad RI range.

How does the sensor work?

1. Biosensor Basics: The sensor combines a special crystal fibre with a thin coating of aluminium oxide and gold to produce an optical effect when light hits it. This effect is called SPR, and it helps in detecting tiny changes in the surrounding material.

2. Detecting Changes: When the external refractive index (a measure of how light bends in a substance) changes, the sensor detects this by a shift in the wavelength (colour) of the light. The study found that the sensor is very sensitive to changes in the refractive index between 1.32 and 1.40.

3. Sensitivity: The sensor is incredibly sensitive, with a maximum sensitivity of 6000 nm/RIU (nanometres per refractive index unit). This means it can detect very small changes very accurately.

4. Malaria Detection: The sensor can also detect malaria by analysing infected red blood cells. Different stages of malaria infection (Ring, Trophozoite, and Schizont) cause different shifts in the wavelength, which the sensor can measure. The sensor’s sensitivity varies slightly with each stage but is consistently high.

5. High Performance: This sensor is reported to have the highest sensitivity compared to other similar sensors and meets all the requirements for early malaria diagnosis due to its high sensitivity, low detection limit, and ability to detect a wide range of refractive indices.

In essence, this sensor is a powerful tool for detecting both refractive index changes and malaria in the human body with high accuracy and sensitivity.
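The figures quoted above can be related with a short worked calculation. The sketch below assumes the definitions commonly used for SPR sensors, namely wavelength sensitivity S = Δλ/Δn and resolution R = Δn × Δλ_min/Δλ_peak; the article does not state which definitions the paper uses, and the 0.1 nm minimum resolvable wavelength shift is an assumed value, not a figure from the article.

```python
def wavelength_sensitivity(delta_lambda_nm: float, delta_n: float) -> float:
    """Wavelength sensitivity S = delta_lambda / delta_n, in nm/RIU."""
    return delta_lambda_nm / delta_n


def resolution(delta_n: float, min_shift_nm: float, peak_shift_nm: float) -> float:
    """Resolution R = delta_n * delta_lambda_min / delta_lambda_peak, in RIU."""
    return delta_n * min_shift_nm / peak_shift_nm


# Values taken from the article: RI step from 1.38 to 1.40 and a
# maximum sensitivity of 6000 nm/RIU.
delta_n = 1.40 - 1.38
reported_sensitivity = 6000.0

# Resonance wavelength shift implied by that sensitivity over the RI step.
implied_shift_nm = reported_sensitivity * delta_n

# ASSUMPTION: a minimum resolvable wavelength shift of 0.1 nm.
assumed_min_shift_nm = 0.1
sensor_resolution = resolution(delta_n, assumed_min_shift_nm, implied_shift_nm)

print(f"Implied resonance shift: {implied_shift_nm:.1f} nm")
print(f"Resolution under the 0.1 nm assumption: {sensor_resolution:.3e} RIU")
```

Under these assumptions, a sensitivity of 6000 nm/RIU over an RI step of 0.02 corresponds to a resonance shift of about 120 nm, and the resulting resolution is of the same order as the 1.66 × 10^−5 RIU reported in the abstract.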

Practical implementation/Social implications of the research

The photonic crystal fibre-based SPR biosensor represents a significant advancement in medical diagnostics with wide-ranging practical applications and social implications. Its high sensitivity and accuracy in detecting malaria and potentially other diseases can lead to better health outcomes, economic benefits, and improved access to healthcare, particularly in regions that need it the most.

Dr Sanjeev Mani Yadav acknowledges Dr Amritanshu Pandey, Electronics Engineering Department, IIT (BHU) Varanasi, for his continuous support and guidance throughout this research.

- Published in Departmental News, ECE NEWS, News, Research News

A System for Visually Impaired Navigation

A dedicated team of researchers and professors has developed an innovative system, described in the patent titled “System and a Method for Assisting Visually Impaired Individuals”, that uses cutting-edge technology to significantly improve the navigation experience for visually impaired individuals, fostering greater independence and safety.

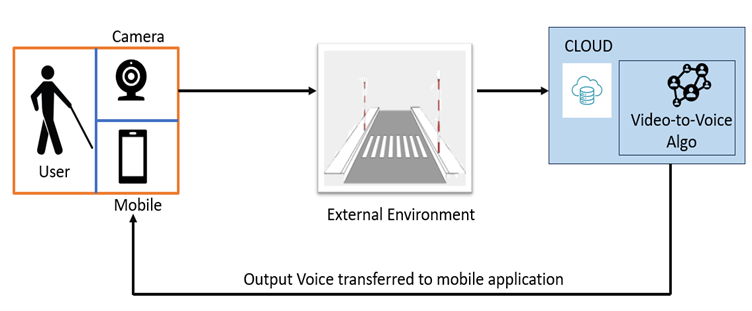

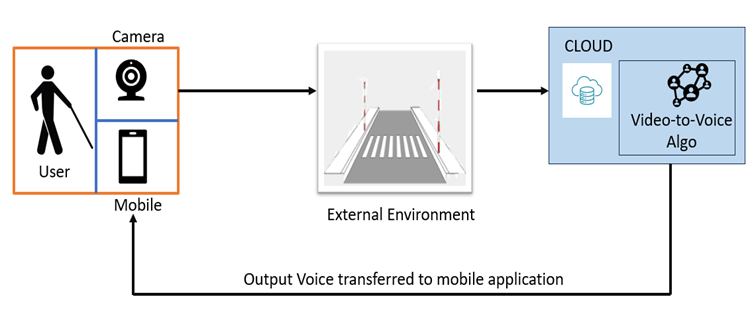

The team, comprising Dr Subhankar Ghatak and Dr Aurobindo Behera, Assistant Professors from the Department of Computer Science and Engineering, and students Ms Samah Maaheen Sayyad, Mr Chinneboena Venkat Tharun, and Ms Rishitha Chowdary Gunnam, has designed a system that transforms real-time visual data into vocal cues via a mobile app. It utilises wearable cameras, cloud processing, computer vision, and deep learning algorithms. Their solution captures visual information and processes it on the cloud, delivering relevant auditory prompts to users.

Abstract

This patent proposes a novel solution entitled “System and a Method for Assisting Visually Impaired Individuals”, aimed at easing navigation for visually impaired individuals. It integrates cloud technology, computer vision algorithms, and deep learning algorithms to convert real-time visual data into vocal cues delivered through a mobile app. The system employs wearable cameras to capture visual information, processes it on the cloud, and delivers relevant auditory prompts to aid navigation, enhancing spatial awareness and safety for visually impaired users.

Practical implementation/Social implications of the research

The practical implementation of our research involves several key components. Firstly, we need to develop or optimise wearable camera devices that are comfortable and subtle for visually impaired individuals to wear. These cameras should be capable of capturing high-quality real-time visual data. Secondly, we require a robust cloud infrastructure capable of processing this data quickly and efficiently using advanced computer vision algorithms and Deep Learning Algorithms. Lastly, we need to design and develop a user-friendly mobile application that delivers the processed visual information as vocal cues in real-time. This application should be intuitive, customisable, and accessible to visually impaired users.
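The last step of this pipeline, turning processed visual information into vocal cues, can be sketched as follows. This is only an illustration under stated assumptions: the `Detection` structure, the confidence threshold, the 15-degree direction bands, and the nearest-first ordering are all hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical output of the cloud vision model for one object."""
    label: str
    confidence: float   # 0.0 to 1.0
    bearing_deg: float  # negative = left of the user, positive = right
    distance_m: float


def describe_direction(bearing_deg: float) -> str:
    """Map a bearing to a coarse spoken direction (assumed 15-degree bands)."""
    if bearing_deg < -15:
        return "on your left"
    if bearing_deg > 15:
        return "on your right"
    return "ahead"


def to_vocal_cues(detections, min_confidence=0.5, max_cues=3):
    """Drop uncertain detections, then announce the nearest objects first."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    kept.sort(key=lambda d: d.distance_m)
    return [
        f"{d.label} {describe_direction(d.bearing_deg)}, "
        f"about {round(d.distance_m)} metres"
        for d in kept[:max_cues]
    ]


# Hypothetical detections for a single camera frame.
frame = [
    Detection("traffic signal", 0.91, 2.0, 8.0),
    Detection("obstacle", 0.78, -30.0, 2.4),
    Detection("crosswalk", 0.32, 0.0, 5.0),
]
cues = to_vocal_cues(frame)
for cue in cues:
    print(cue)
```

In a real deployment the resulting strings would be passed to a text-to-speech engine in the mobile app; limiting the number of cues per frame is one possible way to avoid overwhelming the user with audio.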

The social implications of implementing this research are significant. By providing visually impaired individuals with a reliable and efficient navigation aid, we can greatly enhance their independence and quality of life. Navigating city environments can be challenging and hazardous for the visually impaired, leading to increased dependency and reduced mobility. Our solution aims to mitigate these challenges by empowering users to navigate confidently and autonomously. This fosters a more inclusive society where individuals with visual impairments can participate actively in urban mobility, employment, and social activities.

In the future, we plan to further enhance and refine our technology to better serve the needs of visually impaired individuals. This includes improving the accuracy and reliability of object recognition and scene understanding algorithms to provide more detailed and contextually relevant vocal cues. Additionally, we aim to explore novel sensor technologies and integration methods to expand the capabilities of our system, such as incorporating haptic feedback for enhanced spatial awareness.

Furthermore, we intend to conduct extensive user testing and feedback sessions to iteratively improve the usability and effectiveness of our solution. This user-centric approach will ensure that our technology meets the diverse needs and preferences of visually impaired users in various real-world scenarios.

Moreover, we are committed to collaborating with stakeholders, including advocacy groups, healthcare professionals, and technology companies, to promote the adoption and dissemination of our technology on a larger scale. By fostering partnerships and engaging with the community, we can maximise the positive impact of our research on the lives of visually impaired individuals worldwide.

- Published in CSE NEWS, Departmental News, News, Research News

Significant Advancement in Analytical Detection of NFZ by the Department of Chemistry and RARE Lab

The Department of Chemistry and RARE Lab are excited to announce a groundbreaking advancement in the field of analytical detection. Researchers Dr Rajapandiyan Panneerselvan, Assistant Professor, and PhD scholars Ms Arunima Jinachandran and Ms Jayasree Kumar have developed a novel method for detecting nitrofurazone (NFZ) using three-dimensional silver nanopopcorns (Ag NPCs) on a flexible polycarbonate membrane (PCM), described in their paper “Silver nanopopcorns decorated on flexible membrane for SERS detection of nitrofurazone” published in Microchimica Acta. This innovative technique leverages the power of surface-enhanced Raman spectroscopy (SERS) to provide a highly sensitive and practical solution for detecting NFZ on various surfaces, including fish.

Nitrofurazone (NFZ) is an antibiotic commonly used in veterinary medicine that poses significant health risks if residues enter the food chain. Despite regulatory bans, its illegal use continues, necessitating highly sensitive detection methods. While effective, traditional methods such as high-performance liquid chromatography and mass spectrometry are often costly and labor-intensive. The new SERS-based method offers a more efficient and straightforward alternative.

Abstract

The synthesis of three-dimensional silver nanopopcorns (Ag NPCs) onto a flexible polycarbonate membrane (PCM) for the detection of nitrofurazone (NFZ) on fish surfaces by surface-enhanced Raman spectroscopy (SERS) is presented. The proposed flexible Ag-NPCs/PCM SERS substrate exhibits significant Raman signal intensity enhancement with a measured enhancement factor of 2.36 × 10^6. This enhancement is primarily attributed to the hotspots created on Ag NPCs, which include numerous nanoscale protrusions and internal crevices distributed across the surface. The detection of NFZ using this flexible SERS substrate demonstrates a low limit of detection (LOD) of 3.7 × 10^−9 M and uniform, reproducible Raman signal intensities with a relative standard deviation below 8.34%. The substrate also exhibits excellent stability, retaining 70% of its efficacy even after 10 days of storage. Notably, the practical detection of NFZ in tap water, honey water, and fish surfaces achieves LOD values of 1.35 × 10^−8 M, 5.76 × 10^−7 M, and 3.61 × 10^−8 M, respectively, highlighting its effectiveness across different sample types. The developed Ag-NPCs/PCM SERS substrate presents promising potential for the sensitive SERS detection of toxic substances in real-world samples.
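Two of the figures of merit quoted in the abstract, the enhancement factor and the relative standard deviation (RSD), can be illustrated with a short calculation. The sketch below assumes the commonly used analytical enhancement factor, EF = (I_SERS/C_SERS)/(I_Raman/C_Raman), and the usual RSD definition (sample standard deviation divided by the mean); the article does not state which formulas the authors used, and all intensity and concentration values in the code are hypothetical.

```python
import statistics


def analytical_enhancement_factor(i_sers, c_sers, i_raman, c_raman):
    """EF = (I_SERS / C_SERS) / (I_Raman / C_Raman) (assumed definition)."""
    return (i_sers / c_sers) / (i_raman / c_raman)


def relative_standard_deviation(intensities):
    """RSD in percent: sample standard deviation divided by the mean."""
    return 100.0 * statistics.stdev(intensities) / statistics.mean(intensities)


# HYPOTHETICAL peak intensities measured at five spots on one substrate.
spot_intensities = [980.0, 1010.0, 1050.0, 995.0, 1020.0]
rsd = relative_standard_deviation(spot_intensities)

# HYPOTHETICAL intensities and molar concentrations for the SERS
# measurement and for a normal Raman reference measurement.
ef = analytical_enhancement_factor(
    i_sers=2000.0, c_sers=1e-6, i_raman=100.0, c_raman=1e-1
)

print(f"RSD across spots: {rsd:.2f}%")
print(f"Enhancement factor: {ef:.2e}")
```

A low RSD across measurement spots indicates a uniform substrate, which is the property the abstract summarises as an RSD below 8.34%.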

Methodology

The synthesis involves creating silver nanopopcorns on a flexible polycarbonate membrane using a simple chemical method. The resulting Ag NPCs exhibit high surface roughness with numerous nanoscale features that enhance the Raman signal. This flexible substrate can easily collect samples from irregular surfaces without requiring extensive preparation.

This SERS substrate can detect NFZ in various real-world samples, including:

- Tap water

- Honey water

- Fish surfaces

The method’s sensitivity and ease of use make it a promising tool for ensuring food safety and monitoring environmental contaminants.

The Department believes this development will significantly impact public health by providing a reliable and accessible method for detecting harmful substances in the food chain.

- Published in Chemistry-news, Departmental News, News, Research News

Innovative System for Detection and Classification of Manufacturing Defects in PCB

Dr Ramesh Vaddi, Associate Professor & Head of the Department of Electronics and Communication Engineering, along with his PhD scholar Mr A Vinod Kumar, has developed a new system for real-time and accurate detection and classification of manufacturing defects in Printed Circuit Boards (PCBs). This groundbreaking invention has been filed, and published in the Patent Office Journal, with Application Number 202441021739.

Abstract

This study presents a new system for real-time detection and classification of defects in Printed Circuit Boards (PCBs), which are critical in electronic products and systems. It employs an efficient model with pre-trained weights to detect defects for enhanced quality control. The model is initially trained and fine-tuned on a computer and then deployed on a compact computing board. For real-time imaging, a high-definition USB camera is connected to the system, allowing direct defect identification without the need for external devices. The output is shown on a monitor, with the PCB image featuring clearly labelled boxes to indicate the type and location of defects. This method offers a streamlined approach to defect classification, helping to improve the quality control process in electronics manufacturing.

Explanation of the Research in Layperson’s Terms

This research focuses on finding defects in PCBs, which are essential for most electronic devices like computers and phones. The system uses a powerful computer model to quickly identify any defects in real time. The model is trained on a regular computer to recognise normal PCBs and various defects. Once ready, it is transferred to a small, efficient computer board. A camera captures images of the PCBs, and the system analyses these images to identify defects. The results are displayed on a screen, clearly marking where the defects are and what types they are. This helps companies quickly and accurately detect defects in their electronics manufacturing process, saving time, reducing waste, and improving product quality.
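The final display step, labelling each detected defect with its type and location, can be sketched as follows. This is a minimal illustration only: the `DefectBox` structure, the defect names, and the 0.5 confidence threshold are hypothetical and do not describe the patented system's actual model or output format.

```python
from dataclasses import dataclass


@dataclass
class DefectBox:
    """Hypothetical detector output: defect type plus a pixel bounding box."""
    defect_type: str
    confidence: float  # 0.0 to 1.0
    x_min: int
    y_min: int
    x_max: int
    y_max: int


def label_defects(boxes, min_confidence=0.5):
    """Build one display label per sufficiently confident detection."""
    labels = []
    for box in boxes:
        if box.confidence < min_confidence:
            continue  # skip detections the model is unsure about
        labels.append(
            f"{box.defect_type} ({box.confidence:.0%}) at "
            f"x={box.x_min}-{box.x_max}, y={box.y_min}-{box.y_max}"
        )
    return labels


# Hypothetical detections for one PCB image.
detections = [
    DefectBox("short", 0.93, 120, 45, 160, 80),
    DefectBox("open circuit", 0.41, 300, 210, 330, 240),
    DefectBox("missing hole", 0.87, 510, 95, 530, 115),
]
labels = label_defects(detections)
for text in labels:
    print(text)
```

In a full system, these labels would be drawn next to the corresponding boxes on the camera image before it is shown on the monitor.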

Practical Implementation/Social Implications of the Research

The practical implementation of this research involves deploying a system for real-time detection and classification of defects in PCBs, essential components in nearly all electronic devices. Using advanced deep learning techniques, the system can quickly identify manufacturing defects early in the production process. This leads to significant improvements in quality control, reduced waste, and lower production costs. By improving quality control in electronics manufacturing, the system helps reduce electronic waste, a significant environmental concern. Early detection of defects also decreases the chances of faulty electronic products reaching consumers, enhancing safety and reducing the need for product recalls. The system’s efficiency and accuracy could lead to more reliable electronics, fostering greater consumer trust in electronic products. This, in turn, encourages companies to invest in higher-quality manufacturing processes, ultimately leading to a more sustainable and responsible electronics industry.

Collaborations

To develop this system, the research team first trained a computer model to recognise defects in PCBs. The training involved feeding the model a large dataset of PCB images, some with defects and some without. The model learned to identify common defects by analysing these examples. Once trained, the model was implemented in a real-time setting and integrated with equipment to inspect PCBs during production. The system used a camera to capture images of each PCB and applied the trained model to analyse these images for defects. Running in real-time, the system could immediately detect issues and alert the manufacturing team, allowing them to correct problems on the spot. This approach improved product quality, reduced the chances of defective electronics reaching consumers, sped up the quality control process, and reduced waste, making the manufacturing process more efficient.

Future Research Plans

The research team has outlined several future plans to enhance and expand their defect detection system for PCBs:

- Model Optimization: Refining the machine learning model to improve accuracy and speed, experimenting with different architectures and training techniques to boost performance.

- Expanded Defect Library: Gathering a more extensive dataset of PCB defects to enable the model to identify a wider range of issues, making the system more versatile for various manufacturing environments.

- Real-World Testing: Testing the system in a broader range of manufacturing settings to ensure robustness and adaptability, understanding performance in diverse scenarios, and fine-tuning for optimal results.

- Integration with Manufacturing Systems: Aiming to integrate the system with other manufacturing processes and technologies for seamless communication between defect detection and other quality control systems, enhancing overall workflow.

- Automation and Robotics: Exploring the use of automation and robotics to streamline the defect detection process, potentially leading to a more automated manufacturing line with reduced human intervention and errors.

- Collaboration and Partnerships: Planning to collaborate with more industry partners and academic institutions to accelerate research and development, gaining valuable insights and resources to advance the system.

- Published in Departmental News, ECE NEWS, News, Research News

SRM AP and MGIT: An Alliance Fostering Research Advancements in Drone Technology

The Centre for Drone Technology, SRM University-AP, signed an MOU with MGIT Co. Ltd, South Korea, on May 09, 2024, to facilitate research, academic, and scientific knowledge exchange in drone technology. The agreement to spearhead research and technology developments in drone applications was signed by Dr R Premkumar, Registrar of SRM University-AP, and Mr Jeong Woo-Chul, CEO of MGIT Co. Ltd, in the presence of Mr Yun Cheol-Hun, Assistant Manager, MGIT Co. Ltd, and Dr Pranav RT Peddinti, Assistant Professor, Department of Civil Engineering.

This collaboration signifies a major leap forward in harnessing the power of cutting-edge drone technology to address real-world challenges. The MOU enables the university to host experts from MGIT, who will deliver seminars, workshops, symposia, etc., to the students and faculty of SRM AP on drone technology, its allied technologies and its applications. Undergraduate and postgraduate students and doctoral scholars of the university will also have the opportunity to take part in collaborative research projects and internships that will deepen their understanding of drone technology.

The scope of the MOU also extends to collaboration with MGIT on national and international research funding, the enrolment of eligible researchers from MGIT in various courses at SRM AP, and access for the MGIT team to the university’s academic, research and technical facilities, such as laboratories, the library, etc., for research purposes.

Through this MOU, SRM AP and MGIT aim to explore innovative solutions, push the boundaries of possibility, and revolutionise the future of drone technology.

- Published in News

SRM Foundation Establishes a Free Tuition Centre at Nidamarru

A free tuition centre was established in the Nidamarru village on Wednesday under the auspices of SRM Foundation. Dr R Premkumar, Registrar of SRM University-AP and Mrs Revathi Balakrishnan, Associate Director – Directorate of Student Affairs, jointly inaugurated the centre. Dr Premkumar addressed the students and their parents on this occasion, stating that “SRM Foundation of SRM University (Chennai), which is internationally renowned as a leading educational institution in teaching and research, is also active in social service schemes and programs in the states of Tamil Nadu and Andhra Pradesh. This tuition centre is a testament to the Foundation’s philosophy of development for the societal cause.”

The Foundation’s spokesperson, Mr Suresh Kannan, stated that the Foundation set up the first rural tuition centre to provide free education to rural students in Tamil Nadu. SRM AP Media Public Relations Manager Mr Venugopal Gangisetty further remarked that 22 tuition centres have been established in Tamil Nadu state, with 570 students being taught the curriculum. This is the first free tuition centre to be established in Andhra Pradesh.

Mr Elamaran, Senior Consultant of Puthiyathalaimurai Foundation, said that students studying in sixth to tenth standard from economically backward families will be taught free of charge at the tuition centre. Many students of the villages and their parents were overjoyed, seeing the centre as a remarkable source of hope for quality education and support for their children. Ms Prathipati Sushma, a teacher at the tuition centre, thanked SRM Foundation for setting up the first tuition centre in Nidamarru and giving her the opportunity to teach. Sweets and notebooks were distributed to the students after the program. SRM AP Chief Liaison Officer Mr Poolla Ramesh Kumar, Assistant Manager Mr Ramachandra Reddy and the residents of Nidamarru village participated in this program.

- Published in Departmental News, News, student affairs news

MOU Signed with IGCAR for Cutting-edge Biomedical Research

“This is a remarkable opportunity for our students to enhance their fields of study, gain academic insights from expert scientists and participate in cutting-edge research projects at IGCAR. This will ensure that we at SRM AP nurture students with a great scientific temperament,” stated Prof. Manoj K Arora, Vice Chancellor of SRM University-AP on signing the MOU with IGCAR.

SRM University-AP has signed a Memorandum of Understanding (MOU) with the Indira Gandhi Centre for Atomic Research (IGCAR) at Kalpakkam, Tamil Nadu, to collaborate on academic and research projects in Biomedical Research, Disaster Management, and other domains. The MOU was signed by Dr B Venkatraman, Director-IGCAR and Prof. Manoj K Arora, Vice Chancellor, SRM University-AP in the presence of Dr Vidya Sundarrajan, Head PHRMD & QAD, IGCAR Kalpakkam, Mrs M Menaka, Head RAMS, RESD, SQRMG, IGCAR, Kalpakkam, Prof. Ranjit Thapa, Dean-Research, SRM AP and Dr K A Sunitha, Associate Professor, SRM AP.

The MOU underscores a mutually beneficial agreement between the two institutes. On the academic front, the MOU provides internship opportunities, research collaboration for projects and industry visits for the students and faculty of SRM AP. This ensures a knowledge transfer between the two organisations, promoting stellar growth in scientific and technological advancements.

SRM AP collaborated with IGCAR last year on a consultancy project in the pioneering field of Biomedical Research. The parties successfully conducted health screening of over 1500 subjects in the Chengalpattu region in Tamil Nadu, with SRM Medical Hospital & Research Centre and AIIMS Mangalagiri as secondary collaborators. Upon the successful completion of the project, IGCAR and SRM University-AP have further extended their association with an official MOU for academic and research collaborations. “The MOU with SRM University-AP for translational research will be a huge motivation for the young faculty and scholars to pursue breakthrough research in their scientific domains,” remarked Dr B Venkatraman, Director-IGCAR.

Both institutes plan to extend their collaborative health screening project to the state of Andhra Pradesh, focusing on the neighbouring villages of SRM AP. Dr K A Sunitha, Project Head from SRM University-AP, opines that this project aims not just at translational research but also at research for the societal cause. This research makes it possible to understand the correlated factors that influence various health disorders. Prof. Ranjit Thapa, Dean-Research, also expressed his enthusiasm for the project and for expanding the research ventures to other domains.

- Published in Departmental News, News, Research News

NITTTR and SRM AP Collaborate for Trainer Programme on Outcome-Based Education

SRM University-AP organised a “Train to Trainer Programme on Outcome-Based Education (OBE)” for the faculty of the varsity to ensure a comprehensive understanding and implementation of OBE principles across all academic programmes. The five-day programme, initiated on May 20, 2024, was conducted by trainers from the National Institute of Technical Teachers’ Training and Research (NITTTR), Chandigarh. The programme was inaugurated in the presence of Prof. Bhola Ram Gurjar, Director of NITTTR (joined virtually), Prof. Maitreyee Dutta, Professor and Head of Information Management and Emerging Engineering Department, NITTTR, Dr Meenakshi Sood, Associate Professor CDC, NITTTR, Prof. Manoj K Arora, Vice Chancellor, SRM University-AP, Deans of all schools, Directors and Faculty members of the institute.

The programme comprised customised training sessions and modules that covered various aspects of OBE framework design such as Implementation, Challenges, Strategies, Best Practices, Case Studies, Student Testimonials, Impact Analysis, Future Scope, etc. Trainers from NITTTR – Prof. Rama Krishna, Prof. Maitreyee Dutta, Prof. Sandeep Singh Gill, Dr Meenakshi Sood, Dr Balwindar Singh, and Er Amandeep Kaur – delivered the sessions.

More than 40 faculty members were chosen to receive training from the NITTTR team. These faculty members will be the programme-wise coordinators and master trainers, who will then train the rest of the faculty in their respective programmes and departments. This systematic training will ensure that every faculty member of the university has a comprehensive and in-depth understanding of OBE and its impact on the present education system.

- Published in Departmental News, News

A New Chapter Begins: Welcoming the Vibrant Leaders of Student Council 2024-25!

The Directorate of Student Affairs hosted a wonderful Valedictory Ceremony on May 15, 2024, marking a momentous occasion as the university bid farewell to the outgoing Student Council and welcomed the new core team.

The campus bid adieu to Preetam Vallabhaneni, Niruktha Vadlamudi, Sanjana Maini, and Laxman Bankupalle, the charismatic heads of the previous Student Council and applauded them for their phenomenal leadership. Their dedication to managing student needs, organising fests, and ensuring a vibrant campus life has set an incredible standard.

The baton has now been passed to a dynamic new team: Laxman Bankupalle, Nivedha Sriram, Ankith Reddy, and Rishabh Ranjan Ishwar, who will serve as the President, Vice President, General Secretary and Treasurer of Student Council 2024-25. The vibrant team is ready to take the reins and elevate the student experience even further. “With their remarkable capabilities, we are confident that they will continue to strengthen the bridge between the student community and university management”, stated Vice Chancellor Prof. Manoj Arora, as he welcomed the new student leaders. Associate Director-Student Affairs, Ms Revathi B exclaimed, “A new team brings in new energy, a new chapter”, as she perfectly captured the spirit of this transition.

The ceremony also recognised the outstanding contributions of all clubs, societies and committee members, SC members, NSS, CESR (Corporate Engagement and Social Responsibilities), sports teams, and volunteers. The event was graced by the presence of our esteemed Registrar, Dr R Premkumar, CFAO Ms Suma Nulu, Deans, Directors, Faculty and Students of the varsity.

- Published in Departmental News, News, student affairs news

An Inventive Navigation System for the Visually Impaired

The Department of Computer Science and Engineering is proud to announce that the patent titled “A System and a Method for Assisting Visually Impaired Individuals” has been published by Dr Subhankar Ghatak and Dr Aurobindo Behera, Asst Professors, along with UG students Mr Samah Maaheen Sayyad, Mr Chinneboena Venkat Tharun, and Ms Rishitha Chowdary Gunnam. Their patent introduces a smart solution to help visually impaired people navigate busy streets more safely. The system captures visual information with wearable cameras and uses cloud technology to turn it into helpful vocal instructions that users can hear through their mobile phones. These instructions describe things like traffic signals, crosswalks, and obstacles, making it easier for users to move around independently and paving the way for a more inclusive society.

Abstract

This patent proposes a novel solution to ease navigation for visually impaired individuals. It integrates cloud technology, computer vision algorithms, and Deep Learning Algorithms to convert real-time visual data into vocal cues delivered through a mobile app. The system employs wearable cameras to capture visual information, processes it on the cloud, and delivers relevant auditory prompts to aid navigation, enhancing spatial awareness and safety for visually impaired users.

Practical implementation/Social implications of the research

The practical implementation of the research involves several key components.

- Developing or optimising wearable camera devices that are comfortable and subtle for visually impaired individuals. These cameras should be capable of capturing high-quality real-time visual data.

- Building a robust cloud infrastructure to process this data quickly and efficiently using advanced computer vision algorithms and deep learning algorithms.

- Designing and developing a user-friendly mobile application that delivers processed visual information as vocal cues in real-time. This application should be intuitive, customisable, and accessible to visually impaired users.

Fig.1: Schematic representation of the proposal

The social implications of implementing this research are significant. A reliable and efficient navigation aid can greatly enhance the independence and quality of life of visually impaired individuals. Navigating city environments can be challenging and hazardous for the visually impaired, leading to increased dependency and reduced mobility. The research aims to mitigate these challenges by empowering users to navigate confidently and autonomously. This fosters a more inclusive society where individuals with visual impairments can participate actively in urban mobility, employment, and social activities.

In the future, the research cohort plans to further enhance and refine the technology to better serve the needs of visually impaired individuals. This includes improving the accuracy and reliability of object recognition and scene understanding algorithms to provide more detailed and contextually relevant vocal cues. Additionally, they aim to explore novel sensor technologies and integration methods to expand the capabilities of the system, such as incorporating haptic feedback for enhanced spatial awareness. Furthermore, they intend to conduct extensive user testing and feedback sessions to iteratively improve the usability and effectiveness of the solution. This user-centric approach will ensure that the technology meets the diverse needs and preferences of visually impaired users in various real-world scenarios.

Moreover, the team is committed to collaborating with stakeholders, including advocacy groups, healthcare professionals, and technology companies, to promote the adoption and dissemination of this technology on a larger scale. By fostering partnerships and engaging with the community, they can maximise the positive impact of their research on the lives of visually impaired individuals worldwide.

- Published in CSE NEWS, Departmental News, News, Research News