The Department of Computer Science and Engineering is proud to announce that the patent titled “A System and a Method for Assisting Visually Impaired Individuals” has been published by Dr Subhankar Ghatak and Dr Aurobindo Behera, Assistant Professors, along with UG students Mr Samah Maaheen Sayyad, Mr Chinneboena Venkat Tharun, and Ms Rishitha Chowdary Gunnam. The patent introduces a smart solution to help visually impaired people navigate busy streets more safely. Wearable cameras capture the user's surroundings, and cloud technology turns this visual information into helpful vocal instructions that users hear through their mobile phones. These instructions describe features such as traffic signals, crosswalks, and obstacles, helping users move around independently and paving the way for a more inclusive society.

Abstract

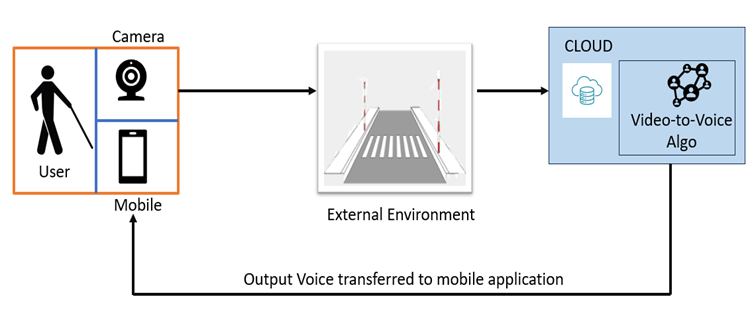

This patent proposes a novel solution to ease navigation for visually impaired individuals. It integrates cloud technology, computer vision algorithms, and deep learning algorithms to convert real-time visual data into vocal cues delivered through a mobile app. The system uses wearable cameras to capture visual information, processes it in the cloud, and delivers relevant auditory prompts that aid navigation, enhancing spatial awareness and safety for visually impaired users.
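The announcement does not describe how recognised objects become spoken prompts, so the following is only an illustrative sketch under stated assumptions. It assumes a hypothetical cloud-side detector that reports, for each camera frame, an object label, a confidence score, a horizontal position in the frame, and an estimated distance. The class names, the 0.5 confidence threshold, the three-cue limit, and the wording are all invented for illustration. The sketch drops low-confidence detections, announces hazards before guidance features (nearest first), and caps the number of cues so the user is not overwhelmed. The resulting sentences would then be passed to the mobile app's text-to-speech engine.

```python
from dataclasses import dataclass

# Hypothetical label set; the patent does not name the detector's classes.
PHRASES = {
    "vehicle": "vehicle",
    "obstacle": "obstacle",
    "red_traffic_signal": "red traffic signal",
    "green_traffic_signal": "green traffic signal",
    "crosswalk": "crosswalk",
}
# Hypothetical: labels treated as hazards are announced before guidance cues.
HAZARD_LABELS = {"vehicle", "obstacle"}


@dataclass
class Detection:
    label: str          # class name from an assumed object detector
    confidence: float   # detector score in [0, 1]
    x_center: float     # horizontal position: 0 = left edge, 1 = right edge
    distance_m: float   # assumed distance estimate in metres


def direction(x_center: float) -> str:
    """Map the horizontal frame position to a coarse spoken direction."""
    if x_center < 1 / 3:
        return "on your left"
    if x_center > 2 / 3:
        return "on your right"
    return "ahead"


def priority(det: Detection) -> tuple:
    """Sort key: hazards (0) before guidance (1), then nearer objects first."""
    rank = 0 if det.label in HAZARD_LABELS else 1
    return (rank, det.distance_m)


def build_cues(detections: list, min_conf: float = 0.5, max_cues: int = 3) -> list:
    """Turn one frame's detections into at most `max_cues` short sentences."""
    kept = [d for d in detections if d.confidence >= min_conf and d.label in PHRASES]
    kept.sort(key=priority)
    cues = []
    for d in kept[:max_cues]:
        metres = max(1, round(d.distance_m))  # never announce "0 metres"
        unit = "metre" if metres == 1 else "metres"
        phrase = PHRASES[d.label].capitalize()
        cues.append(f"{phrase} {direction(d.x_center)}, about {metres} {unit}.")
    return cues
```

For a frame containing a crosswalk ahead, a car to the left, a red signal further ahead, and a low-confidence obstacle, the sketch would announce the car first, then the crosswalk and the signal, and would skip the uncertain obstacle. Ordering by hazard first is one plausible design choice; a real system would likely tune these priorities through the user testing the team describes.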

Practical implementation and social implications of the research

The practical implementation of the research involves several key components.

- Developing or optimising wearable camera devices that are comfortable and unobtrusive for visually impaired individuals. These cameras must capture high-quality visual data in real time.

- Building a robust cloud infrastructure that processes this data quickly and efficiently using advanced computer vision and deep learning algorithms.

- Designing and developing a user-friendly mobile application that delivers the processed visual information as vocal cues in real time. The application should be intuitive, customisable, and accessible to visually impaired users.

Fig.1: Schematic representation of the proposal

The social implications of implementing this research are significant. Navigating city environments can be challenging and hazardous for visually impaired people, leading to increased dependency and reduced mobility. By providing them with a reliable and efficient navigation aid, the system can greatly enhance their independence and quality of life, empowering users to navigate confidently and autonomously. This fosters a more inclusive society in which individuals with visual impairments can participate actively in urban mobility, employment, and social activities.

In the future, the research team plans to further refine the technology to better serve the needs of visually impaired individuals. This includes improving the accuracy and reliability of the object recognition and scene understanding algorithms so that the vocal cues are more detailed and contextually relevant. The team also aims to explore new sensor technologies and integration methods to expand the system's capabilities, such as incorporating haptic feedback for enhanced spatial awareness. Furthermore, they intend to conduct extensive user testing and feedback sessions to iteratively improve the usability and effectiveness of the solution. This user-centric approach is intended to ensure that the technology meets the diverse needs and preferences of visually impaired users in a variety of real-world scenarios.

Moreover, the team is committed to collaborating with stakeholders, including advocacy groups, healthcare professionals, and technology companies, to promote the adoption and dissemination of this technology on a larger scale. By fostering partnerships and engaging with the community, the team aims to maximise the positive impact of its research on the lives of visually impaired individuals worldwide.