Recent News

- Two paper presentations at international research conferences August 1, 2022

Presenting papers at international research conferences helps hone the research questions. Students from the Department of Computer Science and Engineering have attended two international research conferences and presented their papers drafted under the supervision of Assistant Professor V M Manikandan.

The International Conference on Emerging Frontiers in Electrical and Electronic Technologies (ICEFEET-2022), organised by NIT Patna, India, was held from June 24 to 25, 2022. At the conference, BTech students Nitesh Bharti and Mohit Kumar presented the paper “A Hybrid System with Number Plate Recognition and Vehicle Type Identification for Vehicle Authentication at the Restricted Premises”. The work was carried out under the guidance of Assistant Professor Dr V M Manikandan, and the paper will soon be published in the IEEE Xplore Digital Library (Scopus indexed). The proposed computer vision-based system can be used to ensure that only authenticated vehicles enter restricted areas. In the future, the students plan to integrate the proposed algorithms with suitable hardware units to fully automate vehicle authentication in restricted areas.

Explanation of the research

Vehicle detection and number plate recognition have been widely studied in recent years because of their many applications. The research paper proposes a framework for admitting only authorised vehicles into restricted areas such as university campuses and townships, where only a known set of authorised vehicles is expected. Other vehicles may still be allowed in after proper verification by the personnel responsible for security. In the proposed approach, an administrator first registers every authorised vehicle in the system with its essential attributes, such as vehicle number and type. A surveillance camera at the entrance captures live video, and when a vehicle comes into view, the image frames are passed to an automatic number plate recognition module. This module reads the plate number, which is then matched against the database records to authorise the vehicle. The manuscript proposes a real-time, reliable approach for detecting and recognising number plates based on morphology and template matching. To make the system reliable, a frame selection module picks high-quality image frames, and to further improve recognition accuracy, the images are enhanced with techniques such as histogram equalisation, which helps the system perform well even when videos are captured in low lighting. The system also checks that the detected vehicle type matches the type registered for that number, which prevents unauthorised access using fake number plates. The experimental study uses videos captured under varying conditions of lighting, slope, distance, and angle.
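For readers curious how some of these pieces fit together, the Python sketch below illustrates three of them on toy data: histogram equalisation of a grayscale frame, character recognition by template matching, and the final check that a recognised plate and the detected vehicle type both match the registry. It is a minimal illustration under stated assumptions (images as lists of 8-bit pixel rows, binary character glyphs already segmented to template size, a registry keyed by plate number), not the authors' implementation; the function names and registry entries are hypothetical.

```python
from collections import Counter

# Assumption: a grayscale image is a list of rows of 8-bit ints (0..255).
Image = list[list[int]]


def equalise_histogram(img: Image) -> Image:
    """Spread intensities over 0..255 using the cumulative histogram."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    counts = Counter(pixels)
    # Cumulative count of pixels at or below each intensity level.
    cdf, running = [], 0
    for level in range(256):
        running += counts.get(level, 0)
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # a single intensity level: nothing to spread
        return [row[:] for row in img]
    # Standard mapping: (cdf(v) - cdf_min) / (n - cdf_min) * 255, rounded.
    lut = [round(max(c - cdf_min, 0) * 255 / (n - cdf_min)) for c in cdf]
    return [[lut[p] for p in row] for row in img]


def match_character(glyph: Image, templates: dict[str, Image]) -> str:
    """Template matching: pick the label whose binary template differs
    from the glyph in the fewest pixels (glyph and templates same size)."""
    def mismatches(a: Image, b: Image) -> int:
        return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return min(templates, key=lambda label: mismatches(glyph, templates[label]))


def is_authorised(plate: str, vehicle_type: str, registry: dict[str, str]) -> bool:
    """Admit a vehicle only if its plate is registered AND the detected
    type matches the registered type (guards against fake plates)."""
    return registry.get(plate) == vehicle_type


if __name__ == "__main__":
    print(equalise_histogram([[50, 50], [100, 200]]))  # [[0, 0], [128, 255]]
    templates = {"1": [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
                 "7": [[1, 1, 1], [0, 0, 1], [0, 0, 1]]}
    print(match_character([[0, 1, 0], [0, 1, 0], [0, 1, 1]], templates))  # 1
    registry = {"TS09AB1234": "car"}  # hypothetical registered vehicle
    print(is_authorised("TS09AB1234", "car", registry))    # True
    print(is_authorised("TS09AB1234", "truck", registry))  # False
```

In a pipeline like the one described, equalisation would run before plate localisation, and a type check like the one in `is_authorised` is what would flag a registered plate fitted to the wrong kind of vehicle.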

Jahnavi Kolli presented her research paper, “An Efficient Face Recognition System for Person Authentication with Blur Detection and Image Enhancement”, at the International Conference on Sustainable Technology for Power and Energy Systems (STPES), organised by NIT Srinagar and IIT Jammu, India, and held from July 4 to 8, 2022. The research was supervised by Assistant Professor V M Manikandan and carried out in collaboration with Professor Yu-Chen Hu of Providence University, Taiwan. The proposed face recognition system offers an easy way to record the attendance of students in class or employees in an office. In the future, the researchers plan to improve the system so that it performs better when images are captured with low-resolution cameras or when face regions are partly occluded.

Explanation of the research

Recent advances in technology increasingly allow machines to take over work once done by people. Even so, there remain areas where the use of machines still needs to be explored much further, and facial recognition is one of them. Facial recognition systems serve various purposes, such as identifying suspects in public places and authenticating users on restricted premises. In this work, we propose a facial recognition system to authenticate students at the university entrance; the same scheme can also be used to authenticate students before they enter examination halls. As student numbers at universities grow, it becomes strenuous for security staff to record attendance manually, which frequently results in erroneous data. The proposed system captures live video from an area of interest and detects the faces in it, and a face recognition scheme then determines whether each person is authorised. Several facial recognition systems are already available in the literature, and this scheme differs from them in several ways. In particular, it selects the frames with the least blur for face detection and subsequent recognition, using a blur detection scheme to measure the amount of blur in each image. To overcome challenges such as low recognition accuracy for images taken in low lighting, we use histogram equalisation to enhance image quality. The experimental study shows that the proposed approach works well in real time.
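The announcement does not say which blur detection scheme the paper uses. One common, simple choice is the variance of the Laplacian: sharp frames have strong edges and therefore a widely spread Laplacian response, while blurred frames do not. The Python sketch below assumes that measure (it may differ from the authors' method) and uses it to pick the least blurred frame from a short sequence; `laplacian_variance`, `sharpest_frame`, `sharp_enough`, and the threshold parameter are hypothetical names.

```python
# Assumption: each frame is a grayscale image given as a list of rows of ints.
Frame = list[list[int]]


def laplacian_variance(frame: Frame) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels.
    Lower values indicate a blurrier frame."""
    h, w = len(frame), len(frame[0])
    responses = [
        frame[y - 1][x] + frame[y + 1][x] + frame[y][x - 1] + frame[y][x + 1]
        - 4 * frame[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    if not responses:
        raise ValueError("frame must be at least 3x3 pixels")
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def sharpest_frame(frames: list[Frame]) -> Frame:
    """Select the frame with the least blur for face detection."""
    return max(frames, key=laplacian_variance)


def sharp_enough(frames: list[Frame], threshold: float) -> list[Frame]:
    """Keep frames whose sharpness meets a hypothetical, tuned threshold."""
    return [f for f in frames if laplacian_variance(f) >= threshold]


if __name__ == "__main__":
    flat = [[128] * 4 for _ in range(4)]  # uniform frame: no edges at all
    checker = [[255 * ((x + y) % 2) for x in range(4)] for y in range(4)]
    print(laplacian_variance(flat))                    # 0.0
    print(sharpest_frame([flat, checker]) is checker)  # True
```

A threshold such as the one in `sharp_enough` would have to be tuned for the camera and scene; ranking frames with `sharpest_frame` avoids choosing a fixed value at all.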

- Watermarking medical images for their secure transmission July 28, 2022

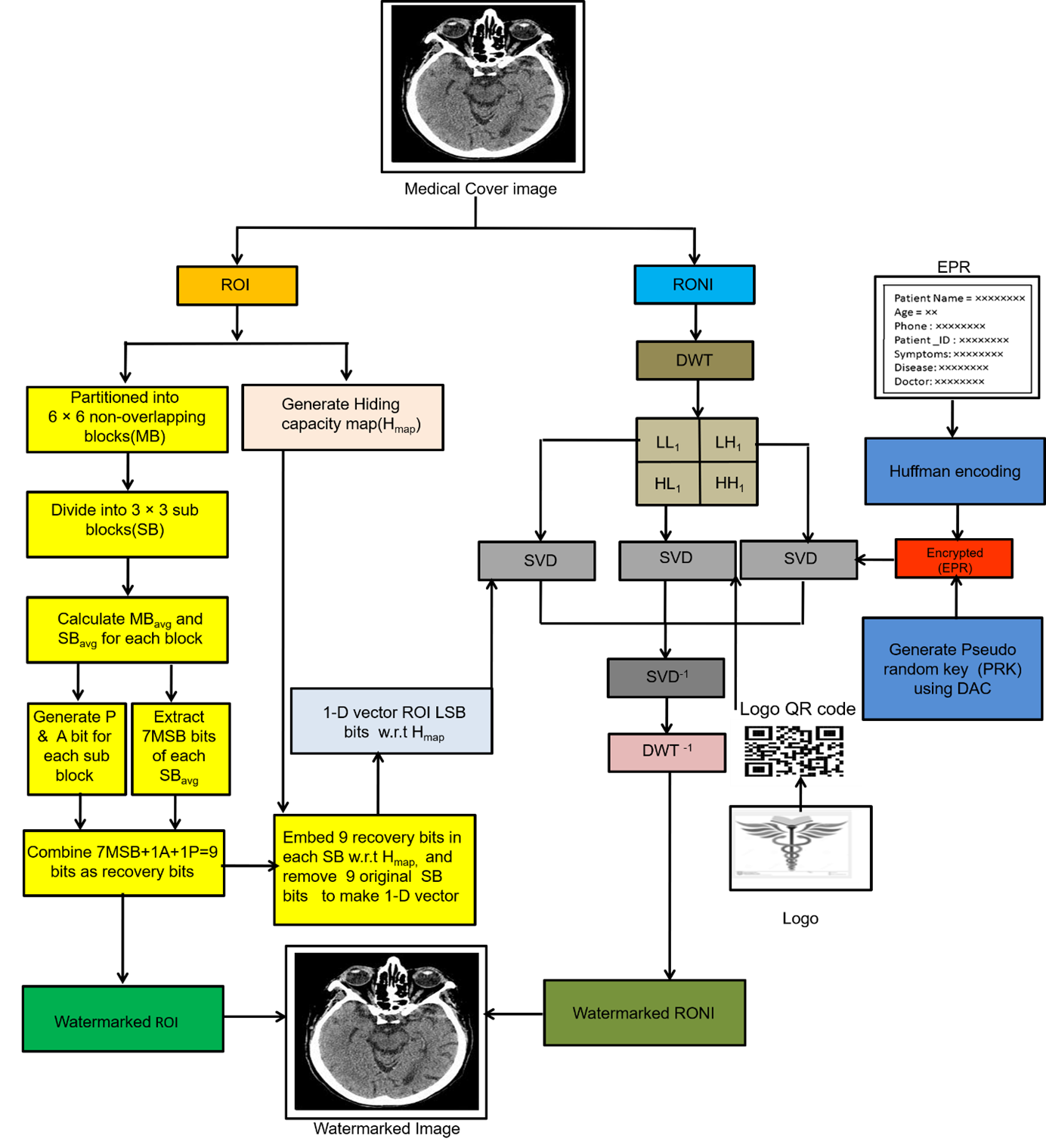

The Department of Computer Science and Engineering is proud to announce that Dr Priyanka, Assistant Professor, and her research scholar, Ms Kilari Jyothsna Devi, have published their patent (application no. 202241033779 A), “A System and a Method for Watermarking Medical Images for the Secure Transmission of Images”. The patent was published by the Indian Patent Office on June 17, 2022.

In current e-healthcare applications, medical images and patient information are widely transmitted over public channels. While medical images or electronic patient records (EPR) are being shared over a public network, they can be tampered with or manipulated, leading to incorrect diagnoses by medical consultants. Similarly, someone can easily make a false claim of ownership over the medical images. This makes protecting the confidentiality of patient records at low cost a major concern.

The proposed novel medical image watermarking (MIW) scheme provides most of the desired watermarking properties, such as high imperceptibility, robustness, security at low computational cost, and tamper detection and recovery, for medical image transmission in real-time healthcare applications. In the future, the researchers intend to design digital image watermarking schemes for the secure transmission of images in blockchain and cloud-based applications.
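The announcement does not describe how the patented MIW scheme works. Purely to illustrate what tamper detection means, the Python sketch below uses a textbook fragile watermark, which is not the patented method: a key-derived bit is written into the least significant bit (LSB) of every pixel, and any pixel whose LSB no longer matches is flagged. Unlike the scheme described above, this toy watermark is not robust (ordinary compression would destroy it), cannot recover tampered regions, and misses any edit that happens to leave the LSB unchanged. The key, the image layout (equal-width rows of 8-bit grayscale values), and the function names are assumptions.

```python
import hashlib
import hmac

# Assumption: an image is a list of equal-width rows of 8-bit grayscale ints.
Image = list[list[int]]


def watermark_bits(key: bytes, count: int) -> list[int]:
    """Derive one pseudo-random bit per pixel from a secret key (HMAC-SHA256)."""
    return [
        hmac.new(key, i.to_bytes(8, "big"), hashlib.sha256).digest()[0] & 1
        for i in range(count)
    ]


def embed(img: Image, key: bytes) -> Image:
    """Replace each pixel's LSB with its watermark bit (changes a pixel by at most 1)."""
    width = len(img[0])
    bits = watermark_bits(key, len(img) * width)
    return [
        [(p & ~1) | bits[r * width + c] for c, p in enumerate(row)]
        for r, row in enumerate(img)
    ]


def tampered_pixels(img: Image, key: bytes) -> list[tuple[int, int]]:
    """Return (row, col) of pixels whose LSB disagrees with the expected bit."""
    width = len(img[0])
    bits = watermark_bits(key, len(img) * width)
    return [
        (r, c)
        for r, row in enumerate(img)
        for c, p in enumerate(row)
        if (p & 1) != bits[r * width + c]
    ]


if __name__ == "__main__":
    key = b"hypothetical-secret-key"
    scan = [[120, 121, 122], [200, 201, 202]]
    marked = embed(scan, key)
    print(tampered_pixels(marked, key))  # []
    marked[1][2] ^= 1                    # simulate a one-pixel manipulation
    print(tampered_pixels(marked, key))  # [(1, 2)]
```

Because each pixel changes by at most one intensity level, the mark is visually imperceptible; practical schemes trade some of that simplicity for robustness and recovery.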
