Recent News

- Enhancing Vehicle Security with Blockchain and Hybrid Computing October 15, 2024

Dr Sriramulu Bojjagani, Assistant Professor, Department of Computer Science and Engineering, and his research scholar, Ms Praneeta Supraneni, have proposed a secure and novel way to safeguard cars from hacking, data breaches, and unauthorised access. Their research paper, titled “Handover-Authentication Scheme for the Internet of Vehicles (IoV) using Blockchain and Hybrid Computing”, aims to improve the transparency and traceability of connected vehicles. Read the interesting abstract to learn more!

Abstract:

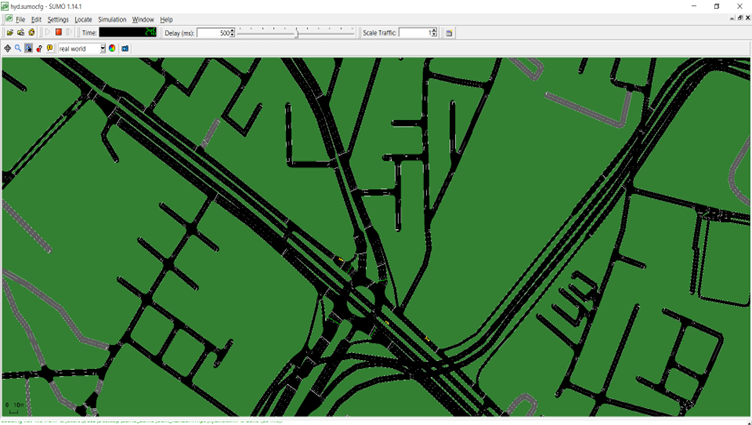

The advancements in telecommunications are significantly benefiting the Internet of Vehicles (IoV) in various ways. Minimal latency, faster data transfer, and reduced costs are transforming the landscape of IoV. While these advantages accompany the latest improvements, they also expand the cyber attack surface, raising security and privacy concerns. Vehicles rely on trusted authorities for registration and authentication, which creates bottlenecks and communication delays. Moreover, the central trusted authority and intermediate nodes raise doubts regarding transparency, traceability, and anonymity. This paper proposes a novel vehicle handover-authentication framework leveraging blockchain, the InterPlanetary File System (IPFS), and hybrid computing. The framework uses a Proof of Reputation (PoR) consensus mechanism to improve transparency and traceability, and the Elliptic Curve Cryptography (ECC) cryptosystem to reduce computational delays. The suggested system assures data availability, secrecy, and integrity while maintaining minimal latency throughout the vehicle re-authentication process. Performance evaluations show the system’s scalability, with key generation, encoding, decoding, and registration operations completed rapidly. Simulation is performed using SUMO (Simulation of Urban MObility) to model vehicle mobility in the IoV environment. The findings demonstrate the practicality of the proposed framework in vehicular networks, providing a reliable and trustworthy approach for IoV communication.
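
The abstract credits ECC with keeping cryptographic cost low. The paper's exact handover protocol is not described here, so the sketch below only illustrates generic elliptic-curve Diffie-Hellman (ECDH) key agreement, the kind of primitive such schemes build on, between a vehicle and a roadside unit. The textbook toy curve y² = x³ + 2x + 2 over GF(17), whose group has just 19 points, is an assumption chosen for readability and offers no security; a real system would use a standard curve through a vetted library. The party names (vehicle, RSU) are hypothetical.

```python
import secrets
from typing import Optional, Tuple

# A point is an (x, y) pair; None stands for the point at infinity (group identity).
Point = Optional[Tuple[int, int]]

# Toy curve y^2 = x^3 + 2x + 2 over GF(17) with generator G of order 19.
# Illustration only (assumed parameters): a curve this small is trivially breakable.
P, A, B = 17, 2, 2
G: Point = (5, 1)
N = 19


def is_on_curve(pt: Point) -> bool:
    if pt is None:
        return True
    x, y = pt
    return (y * y - (x * x * x + A * x + B)) % P == 0


def point_add(p1: Point, p2: Point) -> Point:
    """Add two curve points with the affine chord-and-tangent rule."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # p2 == -p1 (or doubling a point with y = 0): result is the identity
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1, -1, P) % P  # tangent slope
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P  # chord slope
    x3 = (lam * lam - x1 - x2) % P
    y3 = (lam * (x1 - x3) - y1) % P
    return (x3, y3)


def scalar_mult(k: int, pt: Point) -> Point:
    """Compute k * pt with the double-and-add method."""
    result: Point = None
    addend = pt
    while k > 0:
        if k & 1:
            result = point_add(result, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return result


def make_keypair() -> Tuple[int, Point]:
    """Pick a random private scalar in [1, N-1] and derive the public point."""
    private_key = secrets.randbelow(N - 1) + 1
    return private_key, scalar_mult(private_key, G)


# Hypothetical parties: a vehicle and a roadside unit (RSU).
vehicle_priv, vehicle_pub = make_keypair()
rsu_priv, rsu_pub = make_keypair()

# Each side combines its own private key with the other side's public key.
vehicle_shared = scalar_mult(vehicle_priv, rsu_pub)
rsu_shared = scalar_mult(rsu_priv, vehicle_pub)
print(vehicle_shared == rsu_shared)  # True
```

Both sides arrive at the same point because scalar multiplication commutes (a·(b·G) = b·(a·G)), while an eavesdropper who sees only the public points must solve the elliptic-curve discrete logarithm problem, which is infeasible on properly sized curves. ECC reaches a given security level with much shorter keys than RSA, which is the usual reason it is chosen for latency-sensitive settings.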

Practical Implementation / Social Implications:

The practical application of this research can significantly improve the safety and reliability of autonomous vehicles and connected vehicle networks. By securing the handover process, it reduces the risk of hacking, data breaches, and unauthorized access, making connected vehicle systems safer for the public and contributing to the development of smart transportation infrastructures.

Future Research Plans:

Moving forward, we plan to focus on optimizing blockchain solutions for large-scale IoT and smart city applications, with a particular interest in improving consensus mechanisms and security protocols for real-time operations, such as autonomous driving and smart energy grids.

- Mr Ratna Raju Publishes Research on UAV-Assisted Service Caching October 14, 2024

Mr M Ratna Raju, Assistant Professor in the Department of Computer Science and Engineering, has achieved a remarkable milestone by publishing a research paper titled “Service caching and user association in cache-enabled multi-UAV assisted MEN for latency-sensitive applications” in the esteemed Q1 journal, Computers and Electrical Engineering, which boasts an impact factor of 4.0.

The paper explores innovative strategies for improving service caching and user association in multi-unmanned aerial vehicle (UAV) networks, addressing challenges faced by latency-sensitive applications. Mr Raju’s research contributes to the field of computer science, particularly to improving the efficiency of UAV-assisted networks. This publication not only highlights Mr Raju’s dedication to cutting-edge research but also reinforces SRM University-AP’s commitment to fostering academic excellence and innovation in technology. As the demand for efficient communication networks continues to grow, findings from this study are poised to play a critical role in shaping the future of network architecture and UAV applications.

The academic community and students alike look forward to Mr Raju’s further contributions as he continues to lead impactful research initiatives at SRM University-AP.

Abstract of the Research

The evolution of 5G (Fifth Generation) and B5G (Beyond 5G) wireless networks and edge IoT (Internet of Things) devices generates an enormous volume of data. The growth of mobile applications, such as augmented reality, virtual reality, network gaming, and self-driving cars, has increased the demand for computation-intensive and latency-critical processing. These applications require high computation power and low communication latency, which hinders their large-scale adoption on IoT devices because of those devices’ inherently limited computation and energy capabilities.

MEC (mobile edge computing) is a prominent solution that improves the quality of service by offloading services to servers near the users. In addition, in emergencies where the network fails due to natural calamities, UAVs (Unmanned Aerial Vehicles) can be deployed to restore networking ability by serving as flying base stations and edge servers for mobile edge networks. This article explores computation service caching in a multi-UAV-assisted MEC system. The limited resources at each UAV node raise two further problems: allocating the restricted edge resources to satisfy user requests, and associating users with UAVs so that these finite resources are used effectively. To address these problems, we formulate a service caching and user association problem that places diverse latency-critical services so as to maximise time utility subject to deadline and resource constraints.

The problem is formulated as an integer linear programming (ILP) problem for service placement in mobile edge networks. An approximation algorithm based on a rounding technique is designed to solve the formulated ILP problem, and a genetic algorithm is designed to address larger instances of the problem. Simulation results indicate that the proposed service placement schemes considerably improve the cache hit ratio, the load on the cloud, and time utility compared with existing mechanisms.
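
To give a sense of what an ILP of this kind can look like, here is a generic service-placement formulation. The notation below is introduced for illustration and is an assumption; the paper’s actual objective, constraints, and time-utility function may differ.

```latex
\begin{aligned}
\max_{x,\,y}\quad & \sum_{i \in \mathcal{I}} \sum_{u \in \mathcal{U}} \mathcal{T}_i(d_{i,u})\, y_{i,u} \\
\text{s.t.}\quad & \sum_{s \in \mathcal{S}} c_s\, x_{s,u} \le C_u && \forall u \in \mathcal{U} && \text{(cache capacity)} \\
& \sum_{u \in \mathcal{U}} y_{i,u} \le 1 && \forall i \in \mathcal{I} && \text{(at most one UAV per user)} \\
& y_{i,u} \le x_{s_i,u} && \forall i \in \mathcal{I},\ u \in \mathcal{U} && \text{(serve only from a UAV caching } s_i\text{)} \\
& d_{i,u}\, y_{i,u} \le D_i && \forall i \in \mathcal{I},\ u \in \mathcal{U} && \text{(deadline)} \\
& x_{s,u},\ y_{i,u} \in \{0,1\}
\end{aligned}
```

Here x_{s,u} = 1 if UAV u caches service s, y_{i,u} = 1 if user i is associated with UAV u, s_i and D_i are user i’s requested service and deadline, d_{i,u} is the latency when u serves i, c_s and C_u are service size and cache capacity, and 𝒯_i is a time-utility function that decreases as latency grows. Users left unassociated are assumed to be served by the cloud. Because each 𝒯_i(d_{i,u}) is a constant, the objective is linear; relaxing the binary constraints to the interval [0, 1] gives a linear program whose fractional solution a rounding scheme can convert back into a feasible placement.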

Explanation of the Research in Layperson’s Terms

The rapid growth of 5G (Fifth Generation) and B5G (Beyond 5G) wireless networks, along with edge IoT (Internet of Things) devices, is creating a massive amount of data. As mobile applications like augmented reality (AR), virtual reality (VR), online gaming, and self-driving cars become more popular, there’s a greater need for fast, powerful computing. However, IoT devices typically have limited computing power and energy, making it hard to run these advanced applications. Mobile Edge Computing (MEC) offers a solution to this problem by offloading tasks to servers located closer to users, reducing delays and improving performance.

In emergencies where networks fail because of natural disasters, Unmanned Aerial Vehicles (UAVs) can be used to restore connectivity. UAVs can act as flying base stations and edge servers, helping mobile edge networks continue functioning. This research focuses on improving how computation services are cached and handled in a system that uses multiple UAVs to assist MEC. Since UAVs have limited resources, assigning those resources efficiently to meet user demands is a challenge. The research addresses this by formulating the problem as an integer linear programming (ILP) problem, aiming to place services in a way that maximises performance while respecting deadlines and resource limits. The researchers use two approaches to solve it. First, they apply an approximation algorithm based on a rounding technique to solve the ILP problem. Then, for larger problem instances, they use a genetic algorithm. Their simulation results show that these service placement strategies significantly improve metrics such as cache hit ratio, load reduction on the cloud, and time utility compared with existing methods.
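
The genetic-algorithm idea can be illustrated on a tiny instance. Everything in this sketch is hypothetical: the instance data, the linear time-utility function, the chromosome encoding (one cache bit per service per UAV), the random-eviction repair step, and the GA parameters are assumptions rather than the paper’s design, and the rounding-based approximation is not shown. Each user is associated with whichever caching UAV gives it the highest utility; a user whose service is not cached anywhere is assumed to fall back to the cloud with zero utility. Because the instance is small, exhaustive search is included to check the GA’s answer.

```python
import itertools
import random
from typing import List, Sequence, Tuple

# Hypothetical toy instance (not data from the paper).
SERVICE_SIZE = [2, 3, 1, 2]  # storage units needed to cache each service
UAV_CAPACITY = [4, 3]        # cache capacity of each UAV
# Each user: (requested service, deadline in ms, latency in ms to each UAV)
USERS = [(0, 20, [10, 25]), (1, 30, [15, 12]), (2, 15, [30, 8]), (3, 25, [12, 18]), (1, 20, [22, 16])]
S, U = len(SERVICE_SIZE), len(UAV_CAPACITY)


def utility(latency: float, deadline: float) -> float:
    """Assumed time utility: 1 at zero latency, falling linearly to 0 at the deadline."""
    return max(0.0, 1.0 - latency / deadline)


def feasible(genes: Sequence[int]) -> bool:
    """Gene u*S + s says whether UAV u caches service s; check every cache capacity."""
    return all(
        sum(SERVICE_SIZE[s] for s in range(S) if genes[u * S + s]) <= UAV_CAPACITY[u]
        for u in range(U)
    )


def fitness(genes: Sequence[int]) -> float:
    """Total time utility when each user picks its best caching UAV (cloud = 0)."""
    return sum(
        max((utility(lat[u], deadline) for u in range(U) if genes[u * S + service]), default=0.0)
        for service, deadline, lat in USERS
    )


def repair(genes: List[int], rng: random.Random) -> List[int]:
    """Evict randomly chosen services from over-full UAVs until every cache fits."""
    genes = genes[:]
    for u in range(U):
        cached = [s for s in range(S) if genes[u * S + s]]
        while sum(SERVICE_SIZE[s] for s in cached) > UAV_CAPACITY[u]:
            evicted = cached.pop(rng.randrange(len(cached)))
            genes[u * S + evicted] = 0
    return genes


def tournament(pop: List[List[int]], rng: random.Random) -> List[int]:
    """Binary tournament selection: the fitter of two random individuals wins."""
    a, b = rng.sample(pop, 2)
    return a if fitness(a) >= fitness(b) else b


def genetic_algorithm(pop_size=20, generations=40, mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    n = S * U
    pop = [repair([rng.randint(0, 1) for _ in range(n)], rng) for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        children = [best]  # elitism: carry the best placement forward unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, rng), tournament(pop, rng)
            cut = rng.randrange(1, n)  # one-point crossover
            child = [g ^ 1 if rng.random() < mutation_rate else g for g in p1[:cut] + p2[cut:]]
            children.append(repair(child, rng))  # bit-flip mutation, then repair
        pop = children
        best = max(pop, key=fitness)
    return best, fitness(best)


def brute_force() -> Tuple[List[int], float]:
    """Exhaustive search over all 2^(S*U) placements; only viable for tiny instances."""
    best = max(
        (list(g) for g in itertools.product((0, 1), repeat=S * U) if feasible(g)),
        key=fitness,
    )
    return best, fitness(best)


print("GA:", genetic_algorithm())
print("exhaustive:", brute_force())
```

Exhaustive search doubles in cost with every extra cache bit, which is why a heuristic such as a genetic algorithm becomes attractive for larger instances; the trade-off is that the GA offers no optimality guarantee, whereas the rounding-based approximation the researchers describe is designed to come with one.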

Practical Implementation and Associated Social Implications

The practical implementation of this research lies in enhancing the efficiency of real-time, computation-intensive applications such as augmented reality (AR), virtual reality (VR), autonomous driving, and network gaming in mobile edge computing (MEC) environments, particularly in scenarios involving Unmanned Aerial Vehicles (UAVs). By optimizing how services are cached and distributed in a multi-UAV-assisted MEC system, the research enables faster data processing and lower latency, which is crucial for applications where even slight delays can cause major problems, such as self-driving cars or real-time remote surgery. In emergencies such as natural disasters, where ground-based networks may be damaged or overloaded, UAVs deployed as flying base stations and edge servers could quickly restore network connectivity and provide essential services. The research aims to keep services efficiently distributed even under such constraints, improving responsiveness and reliability.

Social Implications:

Disaster Relief: UAVs with MEC support could be deployed during natural calamities to restore communication services, helping rescue teams coordinate better and saving lives.

Smart Cities and Autonomous Vehicles: The work contributes to making smart cities more responsive, with real-time data processing and seamless service delivery. Autonomous vehicles, for instance, would benefit from reduced latency, leading to safer and more efficient navigation.

Healthcare: Applications such as telemedicine and remote surgery could operate more effectively with lower latency, improving healthcare delivery in remote or disaster-affected regions.

Collaborations

1. Manoj Kumar Somesula and Banalaxmi Brahma from Dr. B. R. Ambedkar National Institute of Technology Jalandhar, Punjab 144008, India.

2. Mallikarjun Reddy Dorsala from Indian Institute of Information Technology Sri City, Chittoor, Andhra Pradesh 517646, India.

3. Sai Krishna Mothku from National Institute of Technology, Tiruchirappalli 620015, India.

Future Research Plans

In future, we plan to address the challenge of making caching decisions while accounting for the limited energy capacity of UAVs, UAV mobility, network resources, and service dependencies. These factors introduce new complexities into algorithm design, because the goal becomes minimising the overall service delay while adhering to constraints on energy consumption, UAV mobility, and network resources. This would require the joint optimisation of service caching placement, UAV trajectory, UE-UAV association, and task offloading.
